Key Takeaways: Alert Noise Reduction

- Alert noise is caused by non-actionable, repetitive, or low-impact alerts that distract teams from real incidents.

- Alert noise reduction focuses on improving signal quality—not just reducing alert volume.

- Excessive noise leads to alert fatigue, slower incident response, and operational risk.

- Common causes include static thresholds, duplicate alerts, poor severity classification, and lack of correlation.

- Effective techniques include dynamic thresholding, deduplication, correlation, suppression during maintenance, and context enrichment.

Modern IT environments are noisy.

The volume of telemetry emitted every second by microservices, hybrid clouds, and containerized applications is extraordinary. For IT Operations, NOC teams, and Site Reliability Engineers (SREs), this data is crucial, but only if it can be acted upon. When it can’t, it becomes background noise. If your team is more inclined to dismiss notifications than to address the problems behind them, you have an alert noise problem.

This is more than a nuisance; it is an operational risk. Critical signals get buried under streams of low-priority warnings, resulting in longer response times and possible downtime.

Alert noise reduction is the practice of filtering out noisy or irrelevant data so that every alert requiring human attention is actionable, timely, and rich in context. It is worlds apart from just “notifying less.” The aim is not silence; it’s clarity. By applying noise reduction techniques strategically, organizations can turn their monitoring from a source of chaos into one of trust.

What Is Alert Noise?

In the context of IT operations, alert noise refers to any notification that distracts an operator from important tasks without providing actionable value. Put differently, it is the gap between the total volume of alerts your monitoring systems generate and the alerts that actually require someone to act. If a monitor fires the moment CPU hits 90%, but the cause is a short-lived spike, such as a scheduled job, that subsides two minutes later, that alert is noise. It is technically correct, but functionally it only diverts the engineer’s attention.

Typical alert noise might be something like:

Repetitive Alerts: A single problem triggering the same alert every minute until it is resolved.

Low-Impact Threshold Breaches: Alerts for metrics that are slightly out of bounds but have no impact on user experience or service level agreements (SLAs).

Cascading Alerts: When one of the core components (such as an underlying database) goes down and every dependent application generates its own unique alert, leading to a “storm” of alerts for one underlying cause.

False Positives: Alerts triggered by transient network failures or misconfigured sensors when no legitimate issue is present.

As infrastructure complexity rises, alert noise becomes an ever more challenging problem to solve. Without intervention, the noise grows along with the infrastructure and soon exceeds the team’s capacity to respond.

What Is Alert Noise Reduction?

Alert noise reduction refers to the systematic tuning of monitoring systems and alert logic to reduce non-actionable notifications. It is not a one-time configuration change but an iterative process that continues throughout the entire service management lifecycle. The practice rests on studying patterns in alerts, identifying specific sources of noise, and applying targeted logic (e.g., deduplication, suppression, or correlation) to refine the flow of information reaching the on-call engineer.

The ultimate goal of alert noise reduction is to improve the signal-to-noise ratio. When teams can be sure that an alert indicates a real problem or malfunction, they keep their confidence in the alerting system.

This shift allows for quicker incident response and greater system reliability, because engineers spend their cognitive resources on solving difficult problems rather than sorting through an inbox full of spam.

Ignoring alert noise has tangible costs for the business and the engineering team. Implementing a robust reduction strategy delivers benefits across several key operational metrics.

Reduced On-Call Fatigue

When engineers are woken up at 3 AM for a non-critical issue, their ability to function the next day is compromised. Persistent noise leads to burnout, high turnover, and a state of learned helplessness known as alert fatigue. Reducing noise protects the mental health and productivity of the team.

Faster Mean Time to Resolution (MTTR)

In a noisy environment, identifying the root cause of an outage is like finding a needle in a haystack. By eliminating distracting, irrelevant alerts, responders can immediately identify the critical failure. This clarity significantly shortens the time between detection and resolution.

Improved Trust in Alerts

If a monitoring system cries “wolf” too often, engineers eventually stop listening. They might mute channels, ignore notifications, or assume a new alert is “probably just a glitch.” Noise reduction restores trust. When the pager goes off, the team should know it’s real and requires immediate attention.

Better Service Reliability

With fewer distractions, SREs and Ops teams can focus on proactive reliability engineering rather than reactive firefighting. This shift allows for better capacity planning, architecture improvements, and performance tuning, ultimately leading to a more stable service for end-users.

Reduced Operational Cost

Every alert consumes resources, the most expensive of which is engineering time. Investigating thousands of false alarms annually represents a significant financial waste. Reducing noise streamlines operations and ensures resources are allocated to value-generating activities.

Common Sources of Alert Noise

To reduce alert noise effectively, you must first understand where it originates. While every environment is unique, noise generally stems from five common configuration pitfalls.

1. Static Thresholds

Fixed thresholds (e.g., “Alert if latency > 200ms”) ignore normal variance. Routine traffic peaks during busy periods or batch processing jobs can breach a static threshold without indicating any real problem, producing a massive amount of noise.

2. Infrastructure-Centric Alerts Without Service Context

Alerting on raw infrastructure metrics, such as high disk usage on a particular node, often lacks service context. If that node belongs to a self-healing cluster that automatically spins up a replacement, the alert has no relevance. Alerts that do not reflect the actual state of the service are among the biggest contributors to noise.

3. Duplicate Alerts in Dependent Systems

In microservices architectures, services are closely interconnected. If a payment gateway fails, the checkout service, inventory service, and frontend app might all fail their health checks. Without dependency awareness, this produces three or four separate notifications for a single incident.

4. Lack of Correlation

This is closely related to the dependency problem: without correlation, co-occurring events are treated as separate incidents. A network switch failure may trigger “server unreachable” alerts for 50 different servers. If these alerts can’t be tied to a single “switch down” event, there is no way to manage the noise volume.

5. Poor Severity Classification

Classifying every alert as “Critical” or high severity defeats the purpose of severity levels. If a disk at 80% capacity carries the same urgency as a site-wide outage, engineers cannot prioritize effectively. Misclassification turns informational data into noise, and urgent-sounding noise at that.

Core Techniques for Alert Noise Reduction

Reducing noise requires a layered approach. Teams should apply the following alert management techniques to filter out distractions while preserving critical data.

1. Dynamic Baselines and Threshold Tuning

Shift away from arbitrary, one-size-fits-all thresholds. Analyze historical data to learn what “normal” looks like for your systems, and set threshold values that correspond to actual failure states. Better still, use dynamic baselining (available in many contemporary monitoring tools), where algorithms derive thresholds from historical trends and time-of-day seasonality in the data.
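As a rough illustration of dynamic baselining, the sketch below derives a threshold from historical samples for the same time-of-day bucket using the mean plus a multiple of the standard deviation. This is only one simple approach, not how any particular monitoring product works; the function names, the multiplier `k`, and the sample values are assumptions made for the example.

```python
from statistics import mean, stdev

def dynamic_threshold(history, k=3.0):
    """Return an upper threshold of mean + k standard deviations.

    `history` holds past samples for the same time-of-day bucket
    (for example, latency readings taken at 09:00 on previous days).
    """
    if len(history) < 2:
        raise ValueError("need at least two historical samples")
    return mean(history) + k * stdev(history)

def is_anomalous(value, history, k=3.0):
    """True when `value` exceeds the baseline-derived threshold."""
    return value > dynamic_threshold(history, k)

# Hypothetical latency samples (ms) for a busy hour and a quiet hour.
peak_hour = [180, 195, 210, 205, 190]
quiet_hour = [40, 45, 42, 38, 44]

print(is_anomalous(220, peak_hour))   # False: normal variance for the busy hour
print(is_anomalous(220, quiet_hour))  # True: far above the quiet-hour baseline
```

The same 220 ms reading is treated differently depending on the hour, which is the behavior a single static threshold cannot provide.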

2. Alert Deduplication

Deduplication combines identical alerts into a single notification. If a check runs every minute and fails for 60 minutes, you should get one alert reading “Check failed (x60)” instead of 60 separate emails. That cuts down inbox clutter dramatically when incidents go on for prolonged periods.
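A minimal sketch of deduplication might look like the following. It assumes each alert is a dictionary with hypothetical `check` and `host` keys, and treats that pair as the fingerprint that identifies duplicates; real systems choose their own fingerprint fields.

```python
def deduplicate(alerts):
    """Collapse alerts sharing a fingerprint into one entry with a count.

    The (check, host) pair is used as the fingerprint (an assumption
    made for this example).
    """
    merged = {}
    for alert in alerts:
        key = (alert["check"], alert["host"])
        if key not in merged:
            merged[key] = {**alert, "count": 0}
        merged[key]["count"] += 1
    return list(merged.values())

def summarize(alert):
    """Render a single notification line for a deduplicated alert."""
    return f'{alert["check"]} failed on {alert["host"]} (x{alert["count"]})'

# Sixty identical failures, one per minute, plus one unrelated alert.
raw = [{"check": "http_health", "host": "web-1"} for _ in range(60)]
raw.append({"check": "disk_usage", "host": "db-1"})

for alert in deduplicate(raw):
    print(summarize(alert))
# http_health failed on web-1 (x60)
# disk_usage failed on db-1 (x1)
```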

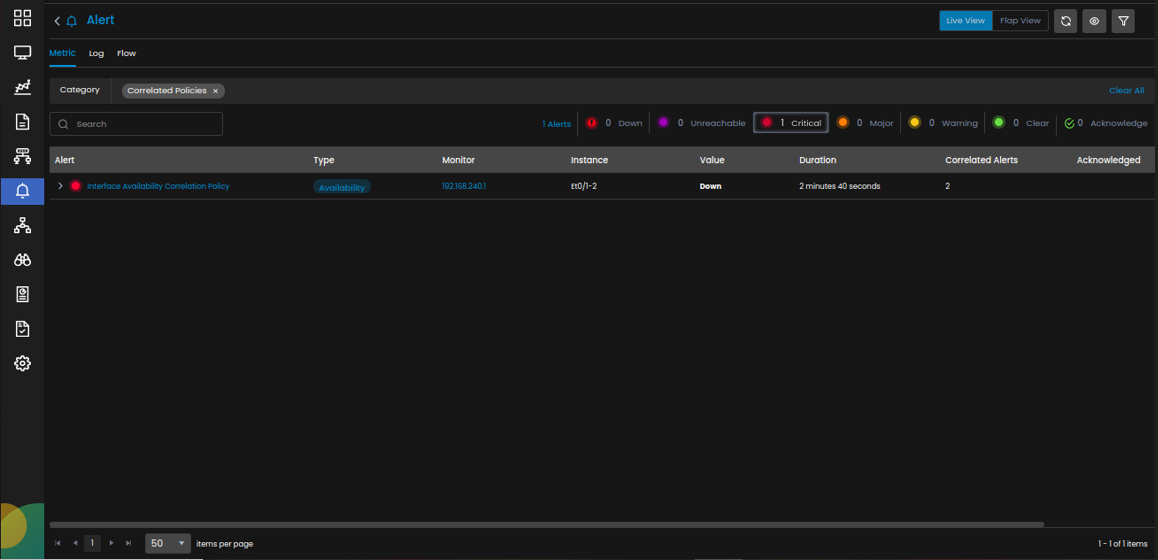

3. Correlation and Grouping

This technique groups related alerts based on time, topology, or logic. For instance, if a database reports errors and the application connected to it reports errors within seconds, those two events should be collapsed into a single incident. This gives a holistic view of the problem instead of treating the components of a single issue as unrelated events.
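Time-based grouping, the simplest of these approaches, can be sketched as below. The tuple layout, the 30-second window, and the service names are assumptions for illustration; grouping on time alone can merge unrelated alerts, which is why production correlation usually also considers topology or explicit rules.

```python
def group_by_time(alerts, window_seconds=30):
    """Group alerts into incidents by temporal proximity.

    `alerts` is a list of (timestamp_seconds, source, message) tuples.
    An alert joins the current incident when it arrives within
    `window_seconds` of the previous alert in that incident.
    """
    incidents = []
    for alert in sorted(alerts, key=lambda a: a[0]):
        if incidents and alert[0] - incidents[-1][-1][0] <= window_seconds:
            incidents[-1].append(alert)
        else:
            incidents.append([alert])
    return incidents

# Hypothetical alerts: a database error, an application error four
# seconds later, and an unrelated alert much later.
alerts = [
    (100, "orders-db", "connection errors"),
    (104, "orders-api", "database query failed"),
    (900, "cache", "eviction rate high"),
]

incidents = group_by_time(alerts)
for number, incident in enumerate(incidents, start=1):
    print(number, [source for _, source, _ in incident])
# 1 ['orders-db', 'orders-api']
# 2 ['cache']
```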

4. Suppression During Maintenance Windows

When patching a server or deploying code, you can expect services to restart or latency to spike. Set up suppression windows so that this expected behavior doesn’t set off pagers and the alert stream remains clean for legitimate anomalies.
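The check behind a suppression window can be quite small. The sketch below assumes windows are stored as dictionaries with hypothetical `host`, `start`, and `end` keys; the hostnames and times are made up for the example.

```python
from datetime import datetime

def is_suppressed(alert_time, host, windows):
    """Return True if `host` is inside a maintenance window at `alert_time`.

    Each window covers one host; start is inclusive, end is exclusive.
    """
    return any(
        w["host"] == host and w["start"] <= alert_time < w["end"]
        for w in windows
    )

# Hypothetical one-hour patching window for a single host.
windows = [
    {
        "host": "web-1",
        "start": datetime(2024, 1, 10, 2, 0),
        "end": datetime(2024, 1, 10, 3, 0),
    },
]

print(is_suppressed(datetime(2024, 1, 10, 2, 30), "web-1", windows))  # True
print(is_suppressed(datetime(2024, 1, 10, 2, 30), "web-2", windows))  # False
print(is_suppressed(datetime(2024, 1, 10, 3, 30), "web-1", windows))  # False
```

Scoping the window to a host and a bounded time range keeps suppression from hiding unrelated problems or lingering after the maintenance ends.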

5. Symptom Rather Than Root-Cause Filtering

Limit paging alerts to symptoms that affect your users (e.g., “Checkout failed”) instead of every potential root-cause metric (e.g., “Database CPU high”). While root-cause metrics are critical for debugging, they don’t necessarily need to page a human at once. Symptom-based alerting ensures that you are responding to actual user impact.

6. Context Enrichment

Context enrichment attaches richer metadata (logs, recent deployment tags, links to runbooks, etc.) to the notification itself. Though it doesn’t reduce the number of alerts in absolute terms, it decreases the cognitive noise accompanying each one. An engineer doesn’t have to hunt for context; it’s already there, and the alert is actionable.
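One way to picture enrichment is as a lookup step that runs before the notification is sent. In this sketch the `service` key, the runbook URL, and the deployment tag are all hypothetical placeholders, not references to a real system.

```python
def enrich(alert, runbooks, deployments):
    """Return a copy of `alert` with runbook and deployment context attached.

    `runbooks` and `deployments` are lookup tables keyed by service name.
    Missing entries are recorded as None rather than raising an error.
    """
    enriched = dict(alert)
    service = alert["service"]
    enriched["runbook_url"] = runbooks.get(service)
    enriched["last_deploy"] = deployments.get(service)
    return enriched

# Hypothetical lookup tables.
runbooks = {"checkout": "https://wiki.example.com/runbooks/checkout"}
deployments = {"checkout": "v2.4.1 deployed 14:05 UTC"}

alert = {"service": "checkout", "message": "Checkout failed"}
print(enrich(alert, runbooks, deployments))
```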

Alert Noise Reduction vs. Alert Fatigue

The Difference Between Alert Noise and Alert Fatigue

The distinction between the problem and the symptom is critical. Alert noise is the technical problem: the excessive volume of non-actionable notifications. Alert fatigue is the human consequence of that noise: the desensitization and exhaustion experienced by the people receiving those notifications.

Reducing alert noise is how you prevent alert fatigue. Fatigue isn’t solved by telling engineers to “pay more attention” or by rotating schedules more frequently. You fix it by attacking the source: the noise itself. Treat noise reduction as an engineering constraint; if the noise is high, reliability work cannot proceed until it is addressed.

Role of Monitoring and Observability in Reducing Alert Noise

High-quality alerting relies on high-quality data. You cannot build a quiet, effective alerting strategy on top of poor monitoring.

Better Signal Quality

Monitoring platforms offer granular data beyond basic up/down checks. By watching the “golden signals” (latency, traffic, errors, and saturation), teams can build alerts that reflect actual service health far better than raw infrastructure stats do.

Context from Logs and Traces

To determine whether an alert is actionable, systems need context. Observability tools that combine metrics with logs and distributed traces allow for smarter alert logic. For example, an alert might fire only if latency is high and the error logs show a spike in 500-level responses.
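The combined condition described above could be expressed roughly as follows. The function name, the 500 ms latency limit, and the 5% error-rate limit are illustrative assumptions; appropriate values depend on the service.

```python
def should_alert(p95_latency_ms, status_codes,
                 latency_limit_ms=500, error_rate_limit=0.05):
    """Fire only when latency is high AND 5xx responses are elevated.

    `status_codes` is a list of HTTP status codes taken from recent logs.
    """
    if not status_codes:
        return False
    errors = sum(1 for code in status_codes if 500 <= code <= 599)
    error_rate = errors / len(status_codes)
    return p95_latency_ms > latency_limit_ms and error_rate > error_rate_limit

healthy_logs = [200] * 100
failing_logs = [200] * 90 + [503] * 10

print(should_alert(800, healthy_logs))  # False: slow, but no errors
print(should_alert(120, failing_logs))  # False: errors, but latency is fine
print(should_alert(800, failing_logs))  # True: both conditions hold
```

Requiring both signals trades some sensitivity for precision, so a condition like this suits paging alerts better than dashboards.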

Intelligent Alerting

Modern observability platforms make intelligent alerting possible. They can ingest massive amounts of data and apply logic that would be impractical to configure by hand, such as anomaly detection that automatically adjusts to changes in traffic patterns, which can further suppress noise.

Tools That Help Reduce Alert Noise

Applying these techniques at scale requires the right tools. Most of this tooling falls into four basic categories.

Monitoring Platforms

These are the foundational tools that collect metrics and logs. Contemporary platforms in this space typically include built-in features such as dynamic thresholding and basic alert damping (e.g., “only alert if the condition continues for 5 minutes”).
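Alert damping of the “condition continues for 5 minutes” kind can be sketched as a check for an unbroken run of breaching samples. The sample format and default duration here are assumptions for the example, not the behavior of any specific platform.

```python
def sustained_breach(samples, threshold, min_duration_seconds=300):
    """Return True if values stay above `threshold` for an unbroken run
    lasting at least `min_duration_seconds`.

    `samples` is a list of (timestamp_seconds, value) pairs sorted by time.
    """
    breach_start = None
    for timestamp, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = timestamp
            if timestamp - breach_start >= min_duration_seconds:
                return True
        else:
            # Any sample back under the threshold resets the timer.
            breach_start = None
    return False

# Hypothetical CPU readings (%), one per minute.
brief_spikes = [(0, 95), (60, 96), (120, 40), (180, 95), (240, 97)]
sustained = [(t, 95) for t in range(0, 361, 60)]

print(sustained_breach(brief_spikes, threshold=90))  # False
print(sustained_breach(sustained, threshold=90))     # True
```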

Event Correlation Engines

These tools sit between your monitoring systems and your alerting platform. They analyze streams of events from different sources and correlate them using topology mapping or temporal logic. They are indispensable for determining whether a network outage and an application timeout belong to the same incident.
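To make topology-based correlation concrete, the sketch below folds “server unreachable” alerts into one incident per failed upstream switch. The topology map, device names, and function name are hypothetical; real correlation engines maintain far richer dependency models.

```python
def roll_up(unreachable_servers, topology, down_devices):
    """Fold server alerts into one incident per failed upstream device.

    `topology` maps each server to its upstream switch. Servers whose
    switch is in `down_devices` are grouped under that switch; all
    others are returned as standalone alerts.
    """
    incidents = {}
    standalone = []
    for server in unreachable_servers:
        parent = topology.get(server)
        if parent in down_devices:
            incidents.setdefault(parent, []).append(server)
        else:
            standalone.append(server)
    return incidents, standalone

# Fifty servers behind one failed switch, plus one unrelated server.
topology = {f"srv-{i}": "switch-a" for i in range(50)}
topology["srv-99"] = "switch-b"

incidents, standalone = roll_up(list(topology), topology, {"switch-a"})
print({switch: len(servers) for switch, servers in incidents.items()})
# {'switch-a': 50}
print(standalone)
# ['srv-99']
```

Fifty-one raw alerts become one “switch down” incident and one alert that still needs its own investigation.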

AIOps Platforms

Artificial Intelligence for IT Operations (AIOps) goes a step further by applying machine learning. AIOps platforms learn the relationships among the parts of your infrastructure over time. They can forecast possible incidents and automatically group noisy alerts into incidents without the need for manual rules, which considerably reduces the administrative burden of noise reduction.

Alert Management Systems

These are the final gatekeepers (e.g., PagerDuty, OpsGenie). They manage routing, escalation, and on-call schedules. Advanced features in these systems include “wait periods” and the detection of “transient alerts” that resolve on their own without ever paging a human.

Best Practices for Sustainable Alert Noise Reduction

Achieving a quiet alerting environment is a journey, not a destination. To make noise reduction sustainable, follow these operational best practices.

1. Do Not Try to Fix Everything at Once

Identify the services that generate the most revenue or carry the strictest SLAs, and focus your noise reduction efforts there first. Improving the alerts for your checkout process pays off far more than fixing the alerts for an internal dev environment.

2. Review Alerts After Incidents

Incorporate alert review into your post-incident review (post-mortem) routine. Ask questions like: Did we get alerted? Was the alert timely? Did we get alerted for things that didn’t matter? Use this feedback loop to recalibrate thresholds.

3. Engage On-Call Engineers

Those who carry the pagers have the most accurate data about what noise is. Enable them to silence or refine alerts. If an engineer labels an alert “not useful,” investigate the reason and correct the logic.

4. Continuously Refine Alert Logic

Infrastructure changes. Code changes. So your alerting logic has to change as well. Schedule regular ‘alert gardening’ sessions to review the top 10 noisiest alerts from the previous month and decide whether to tune, delete, or keep them.
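Finding the noisiest alerts for a gardening session can be as simple as counting alert names in an export of last month’s alerts. The alert names below are made up, and the list-of-names input format is an assumption.

```python
from collections import Counter

def noisiest_alerts(alert_names, top_n=10):
    """Return the `top_n` most frequent alert names with their counts."""
    return Counter(alert_names).most_common(top_n)

# Hypothetical export of fired alert names from the previous month.
fired = ["cpu_high"] * 5 + ["disk_full"] * 2 + ["latency_p95"] * 3

for name, count in noisiest_alerts(fired, top_n=2):
    print(f"{name}: {count}")
# cpu_high: 5
# latency_p95: 3
```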

5. Measure Alert Quality, Not Quantity

Don’t just record the number of alerts you receive. Track the “Actionable Rate”: the share of alerts that resulted in a ticket and/or a fix. If your Actionable Rate is 10%, you have a 90% noise problem. Aim to raise this percentage steadily over time.
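The Actionable Rate is a simple ratio. The sketch below assumes each alert record carries a hypothetical boolean `actionable` field, set when the alert led to a ticket or a fix.

```python
def actionable_rate(alerts):
    """Return the fraction of alerts that led to a ticket or a fix.

    Returns 0.0 for an empty list to avoid dividing by zero.
    """
    if not alerts:
        return 0.0
    actionable = sum(1 for alert in alerts if alert["actionable"])
    return actionable / len(alerts)

# Hypothetical month: 100 alerts, 10 of which required action.
month = [{"actionable": True}] * 10 + [{"actionable": False}] * 90

rate = actionable_rate(month)
print(f"Actionable Rate: {rate:.0%}, noise: {1 - rate:.0%}")
# Actionable Rate: 10%, noise: 90%
```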

Common Mistakes to Avoid

In the rush to quiet the pagers, teams can make mistakes that introduce risk.

1. Silencing Alerts Permanently:

It is tempting to simply delete a noisy alert. However, if that alert covered a genuine failure mode, you are now flying blind. Always replace a noisy alert with a better one (e.g., a tuned threshold) rather than removing it entirely.

2. Over-Automation Without Oversight:

Using “black box” AIOps tools to suppress alerts automatically can be dangerous if you don’t understand the logic. Ensure you have visibility into what is being suppressed to avoid missing critical incidents.

3. Ignoring Alert Reviews:

Setting up noise reduction rules and then forgetting about them leads to “drift.” As systems evolve, old suppression rules might hide new problems.

4. Treating Noise Reduction as a One-Time Task:

Cleaning up alerts is not a “spring cleaning” activity. It is a daily operational habit. If you stop tuning, the noise will return.

Conclusion

Alert noise reduction is fundamental to modern IT operations. It transforms monitoring from a reactive burden into a proactive asset. By distinguishing between true signals and distracting noise, organizations can protect their teams from fatigue, speed up incident resolution, and ultimately deliver a more reliable experience to customers.

However, this requires a shift in mindset. It demands treating alert configuration with the same rigor as application code, subject to review, testing, and continuous improvement. As operational environments grow more complex, the ability to manage alert noise will become a defining characteristic of high-performing engineering teams.

If alert noise is slowing down your IT teams, it’s time to move beyond traditional monitoring. Our Unified Observability Platform and IT operations solutions help organizations reduce alert noise, correlate events, and prioritize incidents automatically, enabling teams to focus on real issues instead of chasing false alarms.

Explore how Motadata can help you transform monitoring into actionable intelligence and build resilient, noise-free IT operations.

FAQs

Alert noise reduction is the process of filtering, correlating, and prioritizing monitoring alerts so IT teams receive only actionable notifications. This helps improve incident response efficiency and reduces operational fatigue.

Excessive alerts overwhelm teams, causing delayed responses and missed critical incidents. Effective alert noise reduction improves incident management by ensuring teams focus only on high-impact issues.

AIOps platforms use machine learning and event correlation to group related alerts, detect anomalies, and suppress redundant notifications, significantly reducing alert volume.

Alert tuning ensures monitoring tools generate meaningful alerts aligned with business impact, preventing unnecessary escalations and improving overall IT operations performance.

Yes. Faster incident resolution and fewer service disruptions directly improve system reliability and customer experience, making alert optimization a key factor in operational success.