Amazon Web Services is a comprehensive, well-supported cloud service that is continuously growing and evolving. As cloud technology continues to boom, enterprises all across the globe are increasingly depending on cloud service providers to manage their workloads, data, and applications.

With digital transformation accelerating, organizations are increasingly moving their IT infrastructure to the cloud, and AWS remains the most sought-after service thanks to its diverse product portfolio.

AWS has emerged as the dominant player with a 30% market share, so security has become a priority for the organizations that run on it. That means investing in an AWS monitoring solution that complements existing AWS security tools, satisfies the requirements of the AWS shared responsibility model, and enables best practices for AWS monitoring.

AWS monitoring helps ensure security, high performance, and proper resource allocation.

Before delving deeper into the best practices for AWS monitoring, we should first be aware of the key metrics that need to be monitored.

The metrics covered below provide a foundation for understanding the performance and availability of your deployed applications.

What is AWS Monitoring?

AWS monitoring refers to keeping an eye on your Amazon Web Services (AWS) cloud system to ensure everything is running smoothly.

It involves checking how well your resources are working, such as how much CPU power or memory they are using, how much data they are sending over the network, and how much storage space they have left.

The aim is to catch any problems early on, before they become significant, and ensure your AWS setup is working efficiently.

Learn more in detail – AWS Monitoring

Important AWS Monitoring Metrics to Watch:

1. CPU Utilization:

CPU utilization measures the percentage of allocated compute units currently in use. If you see degraded application performance alongside continuously high CPU usage, the CPU may be a resource bottleneck.

Tracking CPU utilization across your instances, as part of your AWS monitoring module, can also help you determine whether they are oversized or undersized for your workload.
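As a rough illustration of that sizing check, the sketch below labels instances from their average CPU utilization. The instance names, the sample values, and the 20% and 80% cut-offs are assumptions made up for this example; in practice the samples would come from a source such as the `CPUUtilization` metric in CloudWatch, and the right cut-offs depend on your workload.

```python
from statistics import mean

def classify_sizing(cpu_samples, low=20.0, high=80.0):
    """Label an instance from its average CPU utilization (percent).

    The low/high cut-offs are illustrative assumptions, not AWS guidance.
    """
    if not cpu_samples:
        return "no-data"
    avg = mean(cpu_samples)
    if avg >= high:
        return "undersized"   # consistently busy: CPU may be a bottleneck
    if avg <= low:
        return "oversized"    # mostly idle: paying for unused capacity
    return "right-sized"

# Hypothetical hourly CPU averages for three instances.
fleet = {
    "web-1": [85.0, 91.5, 88.0, 93.0],
    "batch-1": [4.0, 6.5, 3.0, 5.5],
    "api-1": [45.0, 52.0, 38.5, 61.0],
}
report = {name: classify_sizing(samples) for name, samples in fleet.items()}
print(report)
```

A simple average is used here to keep the sketch short; a longer observation window or a percentile would usually give a more reliable picture.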

Application Status Check: These checks provide visibility into your application health, and also help you determine whether the source of an issue lies with the instance itself or the underlying infrastructure that supports the instance.

2. Latency:

High latency can indicate issues with network connections, backend hosts, or web server dependencies that can have a negative impact on your application performance.
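Latency is usually easier to judge from percentiles than from an average, because a few slow requests can hide behind many fast ones. The sketch below uses a simple nearest-rank percentile on made-up response times; the sample values are assumptions for illustration only.

```python
import math
from statistics import mean

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

# Hypothetical response times in milliseconds, with one slow outlier.
latencies_ms = [12, 15, 11, 14, 13, 250, 16, 12, 14, 13]

avg = mean(latencies_ms)
p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
print(f"avg={avg} ms, p50={p50} ms, p99={p99} ms")
```

Here the median stays low while the 99th percentile exposes the slow request, which is the kind of signal that points to a struggling backend host or dependency.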

3. Memory Utilization:

Tracking memory utilization can help you determine if you’ve adequately scaled your IT infrastructure. If memory utilization is consistently high, you may need to update your task definitions to either increase or remove the hard limit so that your tasks continue to run.

4. Disk Utilization:

Tracking disk utilization can help you determine the disk capacity on a node’s storage volume, which can be set up differently depending on whether you are deploying pods.

As with memory, if disk space runs low on a node’s volume, Kubernetes will attempt to free up resources by evicting pods.

5. Swap Usage:

Swap Usage measures the amount of disk used to hold data that should be in memory. You should track this metric closely because high swap usage defeats the purpose of in-memory caching and can significantly degrade application performance.

Now that we are aware of the key metrics to be monitored, it is important to understand the best practices around AWS monitoring to employ better risk management and use its output to foresee and prevent potential problems.

AWS Monitoring Services

- Amazon CloudWatch: A monitoring service that provides real-time insights into resource utilization, application performance, and operational health of AWS resources, helping you troubleshoot issues and optimize performance.

- AWS CloudTrail: A service that logs and monitors API activity in your AWS account, enabling you to track user and resource activity, simplify compliance auditing, and troubleshoot operational issues.

- AWS Certificate Manager: A service that simplifies the process of provisioning, managing, and deploying SSL/TLS certificates for your AWS-based applications, making it easy to secure your websites and services.

- Amazon EC2 Dashboard: A web-based console that provides a centralized view of your Amazon EC2 instances, allowing you to monitor their performance, track resource utilization, and quickly identify any operational issues or bottlenecks.

Best Practices for AWS Monitoring

1. Prioritize what you monitor:

When monitoring your AWS environment, focus on the specific areas that are critical to your application’s smooth operation.

Regularly monitoring these hotspots can catch any potential issues before they become big problems.

Creating a culture of regular monitoring means making it a routine practice for your team. By doing so, you can troubleshoot problems and check the overall health of your system during everyday work.

This is especially important for online businesses that need to comply with regulations. Regular cloud monitoring helps ensure that you meet those compliance requirements as well.

In summary, prioritize monitoring the critical parts of your AWS setup to avoid last-minute issues. Make monitoring a regular practice, so you can catch problems early and comply with regulations if needed.

2. Automation is a game-changer; use it wherever possible:

For IT teams that maintain an AWS environment and manage a multitude of resources within a complex enterprise IT infrastructure, automated responses to alerts become important. Dynamic automation in AWS monitoring helps organizations to:

- Improve cloud productivity via dynamic configuration of services, such as increasing memory or storage capacity.

- Avoid delays in issue resolution when the human response to an alert is hindered by access and permission restrictions.

- Focus on remediating more critical issues by having minor tasks automated.
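One way to picture automated responses is a small runbook that maps alert names to remediation steps and escalates anything it does not recognize. Everything in this sketch is hypothetical: the alert names, the resources, and the two handler functions only return a description of what a real handler would do.

```python
def scale_up_storage(resource):
    # Placeholder: a real handler would call your provisioning tooling.
    return f"requested more storage for {resource}"

def restart_service(resource):
    # Placeholder: a real handler would trigger a restart workflow.
    return f"restarted service on {resource}"

# Hypothetical runbook: alert name -> automated remediation.
RUNBOOK = {
    "DiskSpaceLow": scale_up_storage,
    "HealthCheckFailed": restart_service,
}

def handle_alert(alert):
    """Run the automated action for a known alert, otherwise escalate."""
    action = RUNBOOK.get(alert["name"])
    if action is None:
        return f"escalated {alert['name']} on {alert['resource']} to on-call"
    return action(alert["resource"])

print(handle_alert({"name": "DiskSpaceLow", "resource": "db-1"}))
print(handle_alert({"name": "UnknownSpike", "resource": "api-2"}))
```

Keeping the mapping in one place makes it easy to see which minor tasks are automated and which still need a person.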

3. Resolve problems before they become critical at the organizational level:

Engineering teams often solve a problem with a temporary patch and postpone the implementation of a proper fix.

This practice might have a severe downside since a minor unresolved issue may indicate an underlying IT infrastructure problem, eventually resulting in critical errors.

It could also create multiple layers of unmaintainable technical debt, leaving the team unable to respond quickly to issues, ultimately negatively impacting the end-user experience.

That is where the dynamic AWS monitoring approach pitches in to provide timely proactive solutions.

4. Creating predefined policies to determine priority levels:

Predefined policies regulate the events and alerts created by AWS-based services and give you complete control over the applications deployed in your AWS environment.

This keeps your IT management team from being overloaded with notifications and left with almost no time to respond.

Having said that, priority levels will help you build a more sophisticated alert processing system. With the right AWS monitoring solution in place, you can respond to these problems according to their priority level.
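A predefined policy can be as simple as a table of metric, threshold, and priority. The sketch below assigns each incoming reading the most urgent priority it qualifies for and drops readings that match no policy. The metric names, thresholds, and the P1 to P3 labels are assumptions chosen for this example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Policy:
    metric: str
    threshold: float  # percent
    priority: str     # "P1" is the most urgent

# Hypothetical policy table.
POLICIES = [
    Policy("CPUUtilization", 95.0, "P1"),
    Policy("CPUUtilization", 80.0, "P2"),
    Policy("MemoryUtilization", 90.0, "P3"),
]

def prioritize(metric, value):
    """Return the most urgent priority whose threshold is met, or None."""
    matches = [p.priority for p in POLICIES
               if p.metric == metric and value >= p.threshold]
    # "P1" < "P2" < "P3" as strings, so min() picks the most urgent.
    return min(matches) if matches else None

readings = [("MemoryUtilization", 92.0), ("CPUUtilization", 97.0),
            ("CPUUtilization", 60.0)]

queue = []
for metric, value in readings:
    priority = prioritize(metric, value)
    if priority is not None:  # readings below every threshold create no alert
        queue.append((priority, metric))
queue.sort()
print(queue)
```

Sorting the queue by priority gives responders the most urgent items first instead of a flat stream of notifications.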

5. Set up real-time alerts:

When it comes to AWS monitoring, setting up alerts is vital. Alerts notify you as soon as an incident occurs. Configure real-time alerts based on thresholds or anomalies for critical metrics.

This allows you to respond to issues quickly and minimize downtime. Ensure that you send alerts to the appropriate stakeholders for timely action.

While you set up alerts, ensure that you define accurate thresholds depending on the specific needs of your infrastructure. Setting thresholds too high or too low can negatively affect your monitoring system.

Setting thresholds too low can trigger unnecessary alerts for non-critical situations, leading to alert fatigue. On the other hand, setting thresholds too high increases the risk of missing critical issues that require immediate attention.
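The effect of a poorly chosen threshold is easy to see with a small evaluation function. The sketch below raises an alarm only when at least two of the last three datapoints breach the threshold, which also keeps a single spike from paging anyone. The CPU series and all threshold values are made-up assumptions.

```python
def should_alarm(datapoints, threshold, evaluation_periods=3,
                 datapoints_to_alarm=2):
    """Alarm when enough of the most recent datapoints exceed the threshold."""
    recent = datapoints[-evaluation_periods:]
    breaches = sum(1 for value in recent if value > threshold)
    return breaches >= datapoints_to_alarm

# Hypothetical CPU utilization series (percent).
normal_load = [40, 95, 42, 41, 45]   # one short spike, otherwise healthy
incident = [40, 50, 92, 96, 94]      # sustained high load

print(should_alarm(normal_load, threshold=90))  # False: the spike has passed
print(should_alarm(incident, threshold=90))     # True: sustained breach
print(should_alarm(normal_load, threshold=40))  # True: fires on normal load
print(should_alarm(incident, threshold=99))     # False: real incident missed
```

The last two calls show both failure modes: a threshold set close to normal load produces noise, and one set far above it stays silent during a real incident.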

Establishing Efficient AWS Monitoring for your Business Needs

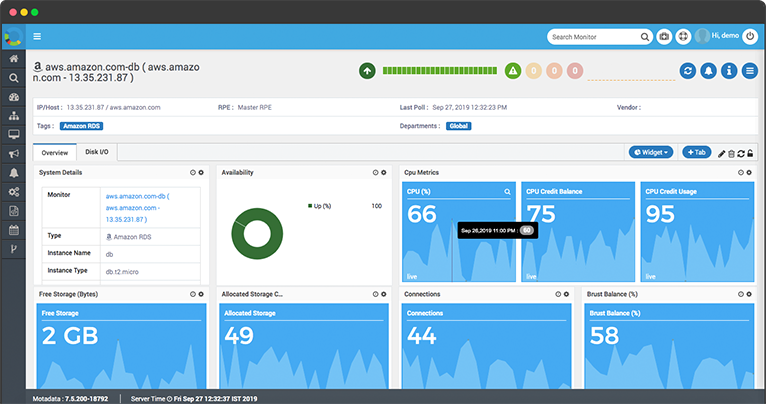

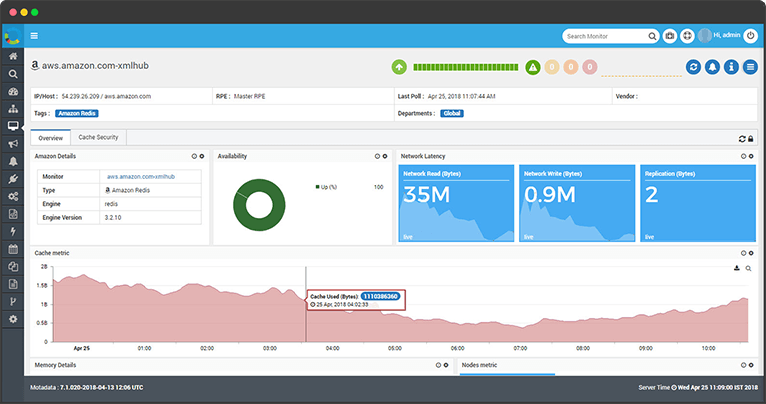

With Motadata NMS, you get improved visibility through its AWS monitoring solution. You can monitor the performance of AWS-hosted applications, drill down into each transaction, and extract critical code-level details to resolve performance issues in your distributed AWS applications.

The solution helps to evaluate, monitor, and manage business-critical cloud-based (public and private) services, applications, and infrastructure on a constant basis.

The proactive cloud monitoring module, which includes AWS monitoring, yields metrics such as response time, availability, and frequency of use to make sure that the cloud infrastructure performs at acceptable levels at all times.

To know more about how Motadata’s cloud monitoring platform provides a complete picture of the overall health of your cloud environment including all the nodes, users, and transactions from one dashboard, request a demo.

FAQs

AWS monitoring is important because it helps ensure the availability, performance, and security of your AWS resources and applications. It allows you to proactively identify and resolve issues, monitor resource utilization, and maintain compliance.

Some commonly used AWS services for monitoring include Amazon CloudWatch, AWS CloudTrail, AWS Config, AWS X-Ray, and AWS Health.

You can set up monitoring alarms in AWS using Amazon CloudWatch.

You can define alarm conditions based on metrics like CPU utilization or network traffic and configure actions that trigger when those conditions are met, such as sending notifications or automatically scaling resources.
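As a sketch of what such an alarm definition might look like, the function below builds the settings for a CPU alarm on one EC2 instance. The keys follow the parameter names of CloudWatch's `PutMetricAlarm` API as we understand them; the instance ID, the SNS topic ARN, and the chosen values are placeholders, and the call that would actually create the alarm is left as a comment because it needs boto3 and AWS credentials.

```python
def cpu_alarm_params(instance_id, topic_arn, threshold=80.0):
    """Build keyword arguments for a CPU alarm on one EC2 instance."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,               # seconds per datapoint
        "EvaluationPeriods": 3,      # look at the last 3 datapoints
        "DatapointsToAlarm": 2,      # alarm if 2 of them breach
        "Threshold": threshold,      # percent
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [topic_arn], # e.g. an SNS topic that notifies the team
    }

# Placeholder identifiers, not real resources.
params = cpu_alarm_params(
    "i-0123456789abcdef0",
    "arn:aws:sns:us-east-1:123456789012:ops-alerts",
)
print(params["AlarmName"])

# With boto3 installed and credentials configured, this could be sent as:
# boto3.client("cloudwatch").put_metric_alarm(**params)
```

Check the current CloudWatch documentation before relying on these values, since the right period, statistic, and threshold depend on your workload.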

To monitor multiple AWS accounts efficiently, you can use AWS Organizations. It allows you to centralize management and apply policies across multiple accounts.

You can optimize performance through AWS monitoring by analyzing the monitoring data and identifying bottlenecks or areas for improvement.

Additionally, you can use performance monitoring tools like AWS X-Ray to gain insights into application performance and identify areas for optimization.